Introduction

Understanding the TASK score gives insight into your strengths and the areas where you need to improve, so you can make informed decisions about where to focus your efforts.

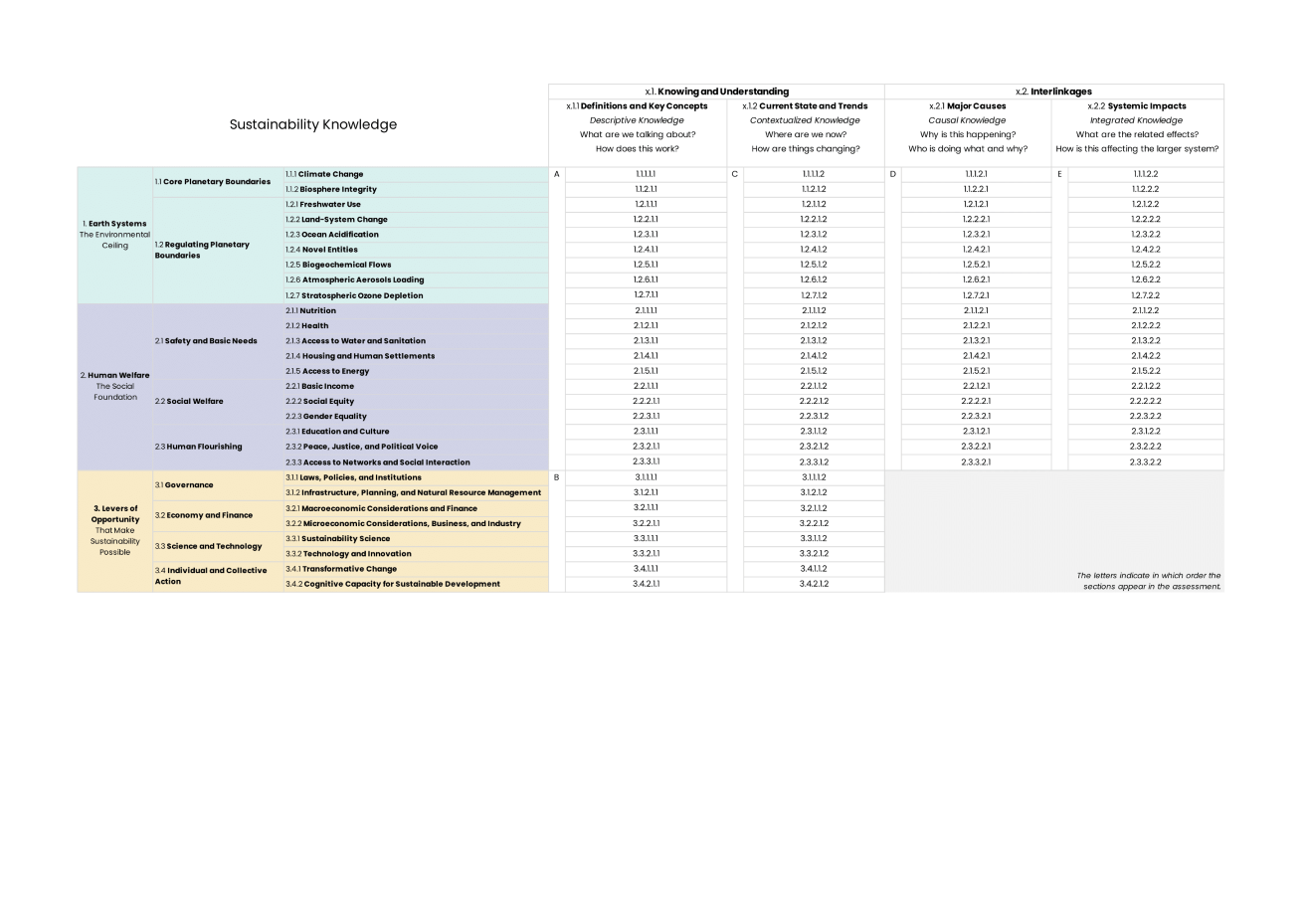

The scores are broken down following the matrix defined by the Sulitest Task Force, which is organized into three Frameworks, each divided into Domains:

- Earth Systems
  - Core Planetary Boundaries
  - Regulating Planetary Boundaries
- Human Welfare
  - Safety and Basic Needs for all
  - Social Welfare for people
  - Elements that contribute to Human Flourishing
- Levers of Opportunity
  - Governance
  - Economy and Finance
  - Science and Technology
  - Individual and Collective Action

The Earth Systems and Human Welfare frameworks contain the following Categories, which track the type of sustainability knowledge being assessed:

| Knowing and Understanding | Interlinkages |
|---|---|
| Descriptive knowledge | Causal knowledge |
| Contextualized knowledge | Integrated knowledge |

The Levers of Opportunity framework does not contain Interlinkages questions, because interlinkages are inherent to the subjects it covers.

Results

Overall score calculation

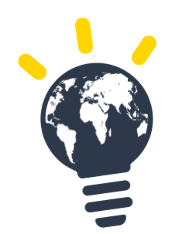

The overall score is the average of all the scores inside the red rectangles.

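Because Human Welfare and Earth Systems contain more boxes, averaging the Framework, Domain, or Subject scores does not give the overall score: every box counts equally in the overall average, so Frameworks with more boxes carry more weight.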

Similarly, because there are more Knowing and Understanding boxes than Interlinkages boxes, averaging the two Knowledge Type scores does not give the overall score.
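To see why these averages differ, here is a minimal Python sketch with made-up box scores. It assumes one box per Domain and Knowledge Type, using the Domains listed above; the values are illustrative, not real TASK results. The overall score averages every box equally, whereas averaging the three Framework averages or the two Knowledge Type averages weights the boxes unequally and gives different numbers.

```python
from statistics import mean

# Hypothetical box scores (0-100), one per Domain, grouped by
# (Framework, Knowledge Type). Illustrative values only, not real TASK data.
BOXES = {
    ("Earth Systems", "Knowing and Understanding"): [60.0, 70.0],
    ("Earth Systems", "Interlinkages"): [50.0, 55.0],
    ("Human Welfare", "Knowing and Understanding"): [65.0, 75.0, 80.0],
    ("Human Welfare", "Interlinkages"): [45.0, 50.0, 55.0],
    # Levers of Opportunity has no Interlinkages boxes.
    ("Levers of Opportunity", "Knowing and Understanding"): [70.0, 72.0, 74.0, 76.0],
}


def scores_where(position: int, label: str) -> list[float]:
    """Collect every box score whose key matches `label` at `position`
    (0 = Framework, 1 = Knowledge Type)."""
    return [s for key, scores in BOXES.items() if key[position] == label for s in scores]


# Overall score: plain average of every box, each box weighted equally.
overall = mean(s for scores in BOXES.values() for s in scores)

# Average of the three Framework averages (NOT how the overall score is computed).
frameworks = ["Earth Systems", "Human Welfare", "Levers of Opportunity"]
mean_of_frameworks = mean(mean(scores_where(0, f)) for f in frameworks)

# Average of the two Knowledge Type averages (also NOT the overall score).
types = ["Knowing and Understanding", "Interlinkages"]
mean_of_types = mean(mean(scores_where(1, t)) for t in types)

print(f"overall:            {overall:.2f}")
print(f"mean of frameworks: {mean_of_frameworks:.2f}")
print(f"mean of types:      {mean_of_types:.2f}")
```

With these numbers, Levers of Opportunity scores highest but holds only 4 of the 14 boxes, so averaging the Framework averages overstates its weight; the Interlinkages boxes are likewise fewer than the Knowing and Understanding boxes.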

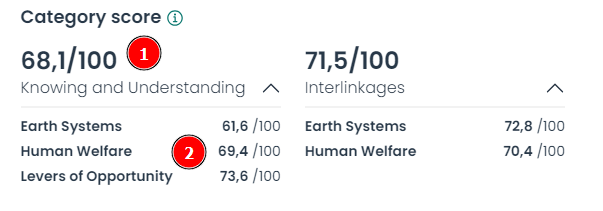

Category score

- This reflects the average score over the Category.

Example for Knowing and Understanding:

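(61.6 + 69.4 + 73.4) / 3 ≈ 68.1, reported as 68.2; the displayed Framework scores are rounded to one decimal, which can shift the last digit of the result.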

- These are the details of the Knowing and Understanding score per Framework.
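The Category score arithmetic can be sketched in Python as a plain average of the Category's per-Framework scores, using the three Knowing and Understanding values from the example. Averaging the displayed (rounded) values gives about 68.13. The "unrounded" inputs below are hypothetical, chosen only to show how values that display as 61.6, 69.4 and 73.4 can average to a figure that rounds to 68.2.

```python
from statistics import mean


def category_score(framework_scores: list[float]) -> float:
    """Category score: plain average of the Category's score in each Framework."""
    return mean(framework_scores)


# Knowing and Understanding score per Framework, as displayed (one decimal).
displayed = [61.6, 69.4, 73.4]
print(f"{category_score(displayed):.2f}")  # 68.13

# Hypothetical unrounded values that would also display as 61.6, 69.4 and 73.4.
unrounded = [61.64, 69.44, 73.44]
print(f"{category_score(unrounded):.1f}")  # 68.2
```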

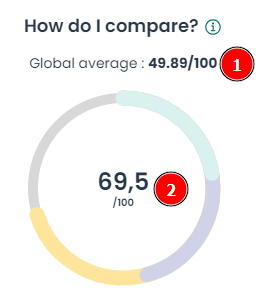

Overall result

- This is the global average at the time the session score was calculated.

- This is the candidate's overall score, the end result for TASK™️.

- High scores indicate high ability. For example, if a person answers many difficult questions correctly, their ability will be estimated as high.

- Low scores indicate low ability. If a person answers many easy questions incorrectly, their ability will be estimated as low.

- Mid-range scores can indicate average ability, but the estimate depends on which items were answered correctly and incorrectly. Two people with the same number of correct answers can receive different ability estimates, because items do not all weigh the same in the estimate (in the 2PL model described below, correct answers on items with higher discrimination raise the estimate more).

The overall score is an average of the ability scores calculated for each Matrix Item.

In the context of Item Response Theory (IRT), "ability" refers to a person's latent trait, which cannot be observed directly but is inferred from their responses to a set of items or questions.

For example, in a mathematics test, the ability would refer to the person's mathematical knowledge or skills.

A person's ability is estimated from their pattern of responses to the items on the test. In the two-parameter logistic (2PL) model applied here, each item has two parameters: difficulty and discrimination. The difficulty is the ability level at which a person has a 50% chance of answering the item correctly; the discrimination describes how sharply that probability rises with ability around this level. Answering a hard item correctly suggests high ability, and answering an easy item incorrectly suggests low ability.
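The 2PL model gives the probability of a correct answer as P(θ) = 1 / (1 + exp(-a(θ - b))), where θ is the ability, b the difficulty and a the discrimination; at θ = b this probability is exactly 50%. The Python sketch below uses hypothetical item parameters (not actual TASK items) and estimates ability by maximum likelihood, which is one common method and an assumption here, since the estimator used for TASK™️ is not specified. It shows two people with the same number of correct answers receiving different estimates: in the 2PL model the responses enter the likelihood through the discrimination-weighted count of correct answers.

```python
import math

# Hypothetical 2PL item parameters: (a = discrimination, b = difficulty).
# Illustrative values only, not real TASK item parameters.
ITEMS = [(1.5, -1.0), (0.5, -0.5), (1.5, 0.5), (0.5, 1.0)]


def p_correct(theta: float, a: float, b: float) -> float:
    """2PL probability of a correct answer; equals 0.5 when theta == b."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def score_function(theta: float, responses: list[int]) -> float:
    """Derivative of the log-likelihood: sum of a * (x - P(theta)).
    It decreases as theta grows, so it has at most one root."""
    return sum(a * (x - p_correct(theta, a, b)) for (a, b), x in zip(ITEMS, responses))


def estimate_ability(responses: list[int], lo: float = -6.0, hi: float = 6.0) -> float:
    """Maximum-likelihood ability estimate, found by bisection on the score function.
    Assumes a mix of correct (1) and incorrect (0) answers: an all-correct or
    all-incorrect pattern has no finite maximum-likelihood estimate."""
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if score_function(mid, responses) > 0:
            lo = mid  # likelihood still increasing: the maximum lies above mid
        else:
            hi = mid
    return (lo + hi) / 2.0


# Both candidates answer 2 of the 4 items correctly.
person_a = [1, 0, 1, 0]  # correct on the two high-discrimination items
person_b = [0, 1, 0, 1]  # correct on the two low-discrimination items

print(f"A: {estimate_ability(person_a):.2f}")
print(f"B: {estimate_ability(person_b):.2f}")
```

Person A ends up with a clearly higher estimate than person B even though person B's correct answers were on items with higher difficulty on average, because person A's correct answers were on the more discriminating items.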

Interpreting scores in terms of ability depends on the specific IRT model being used, but generally:

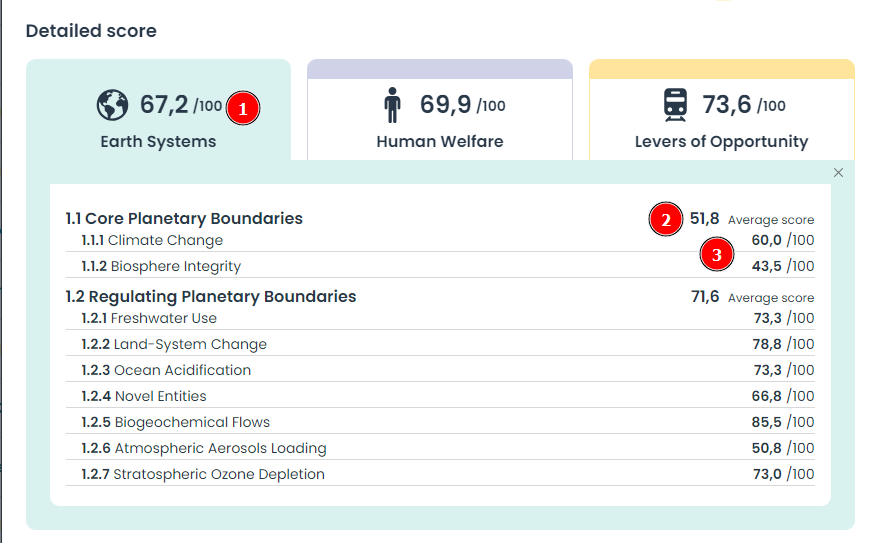

Detailed score

- This is the average over a Framework (e.g. Earth Systems)

- This is the average over one Domain of the Framework

- This is the average over one Subject of the Domain
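The three levels of the detailed score can be sketched as nested averages in Python. This is a minimal sketch under stated assumptions: the Subject names and scores are hypothetical placeholders, and it assumes every Subject is weighted equally, so the Framework score averages all of its Subjects rather than averaging the Domain scores.

```python
from statistics import mean

# Hypothetical Subject scores (0-100) for one Framework, grouped by Domain.
# Subject names and values are placeholders, not real TASK data.
EARTH_SYSTEMS = {
    "Core Planetary Boundaries": {"Subject 1": 62.0, "Subject 2": 70.0, "Subject 3": 66.0},
    "Regulating Planetary Boundaries": {"Subject 4": 58.0, "Subject 5": 64.0},
}

# Subject level: each Subject score is reported as is.
# Domain level: average over the Subjects of that Domain.
domain_scores = {domain: mean(subjects.values()) for domain, subjects in EARTH_SYSTEMS.items()}

# Framework level (assumption: Subjects weighted equally, so this is
# the average over every Subject, not the average of the Domain scores).
framework_score = mean(s for subjects in EARTH_SYSTEMS.values() for s in subjects.values())

for domain, score in domain_scores.items():
    print(f"{domain}: {score:.1f}")
print(f"Earth Systems: {framework_score:.1f}")
```

Under this assumption the Framework score (64.0) differs from the average of its two Domain scores (63.5), because the Domains contain different numbers of Subjects.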

Resources

- Matrix